July 8, 2024 by Mark Overholser

Zero Trust…but Verify

The Black Hat network is unlike an enterprise network. The network operations center (NOC), which Corelight helps to operate, sees traffic that would never be permissible on most enterprise networks. Still, in many ways the Black Hat network is a microcosm of many real-world environments, with similar challenges that require similar solutions. This is why the Black Hat team partners with enterprise technologies to build, maintain, monitor, and protect their network; not only are they best-in-class, they’re well suited to the tasks we in the NOC face during the conference.

The Black Hat NOC Management strives to follow Zero Trust principles when designing and implementing the network. This means adhering to the principle of least privilege and lots of segmentation and authentication, at least where Black Hat has control or influence over the endpoints involved. (A safe assumption is that most attendees would not attend if the conference organizers insisted on the installation of an endpoint agent for the duration of the conference.)

At a practical level, this means:

- Logically separating the various networks

- Using host isolation to isolate devices from each other, even when on the same network segment

- Only allowing cross-boundary communication when there is a specific business requirement or justification

One might think that once you’ve done what you can to separate and authenticate your users and devices, you’re done and have nothing left to fear. Why, then, do we continue to monitor the Black Hat network as deeply as we do?

We’re glad you asked! NIST SP 800-207 section 2.1 (“Tenets of Zero Trust”) explicitly states seven tenets of a Zero Trust architecture, the last of which is:

The enterprise collects as much information as possible about the current state of assets, network infrastructure, and communications and uses it to improve its security posture.

If putting up the walls in all the right places were sufficient to keep out adversaries and keep up operations, there would be no need to keep watch. Why is NIST specifically reminding us that even with a Zero Trust architecture we should remain vigilant and watch over our networks?

Humans are at the heart of everything

Even though we believe that computers are at the heart of our networks, computers and the software that runs on them are still made by humans. Even the “software” which is “written” by LLMs today is still just an amalgamation of code snippets written by other humans.

One constant in human behavior is that we make mistakes. We write code that is syntactically accurate, looks good and still somehow doesn’t accurately adhere to the original specification. We go to lunch telling ourselves we’ll commit the staged firewall rule changes when we get back, and forget to come back to it. We draft a plan for how to configure a switch and forget about disabling this option or enabling that one. Oops.

So, if we’re smart, we accept our fallible nature. We don’t trust that everything was done right the first time: We look back and verify it.

Security analysts might think, “By design, this network shouldn’t be able to talk to that network. If they were somehow able to talk to each other, we should see evidence in the logs here and it would look like this.” Then the analysts would search the relevant logs for that evidence. If it’s found, the analysts can open an incident and follow through; if not, it’s time to move on to the next hunt.

This is an example of a hypothesis-based threat hunt that can validate proper implementation of a Zero Trust Architecture. And it’s based on an actual hunt from our time in the Black Hat NOC, in which we discovered some misconfigured devices in the conference network at a past conference (you can dive into the details provided by my colleague Dustin Lee in this blog post). Experience has made us into adherents of “Zero Trust…but verify.”

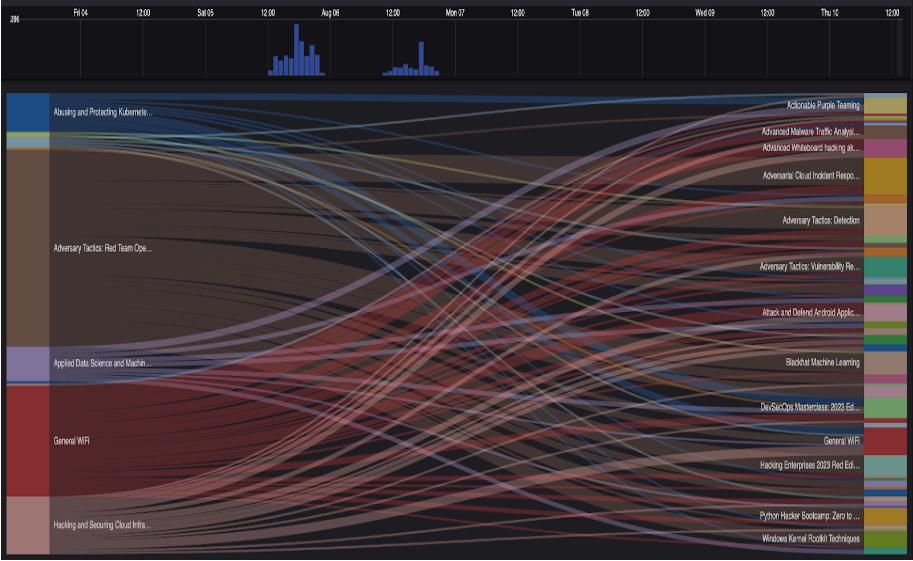

Hint: The lines mean that some network boundaries that were supposed to be working…weren’t. We caught it because we were watching.
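A hunt like this can be sketched in a few lines of Python. The example below is a minimal illustration and not the query we ran in the NOC: the segment names, address ranges, and allowlist are hypothetical, and the records only mimic the `id.orig_h` and `id.resp_h` fields of a parsed Zeek conn.log.

```python
import ipaddress

# Hypothetical segments and address ranges, for illustration only.
SEGMENTS = {
    "attendee_wifi": ipaddress.ip_network("10.10.0.0/16"),
    "registration": ipaddress.ip_network("10.20.0.0/24"),
    "noc": ipaddress.ip_network("10.30.0.0/24"),
}

# Cross-segment flows with a documented justification: (source, destination).
ALLOWED = {("noc", "registration")}


def segment_of(addr):
    """Return the name of the segment containing addr, or None if unknown."""
    ip = ipaddress.ip_address(addr)
    for name, net in SEGMENTS.items():
        if ip in net:
            return name
    return None


def find_violations(conn_records):
    """Yield records whose endpoints sit in different known segments
    and whose direction is not covered by the allowlist."""
    for rec in conn_records:
        src = segment_of(rec["id.orig_h"])
        dst = segment_of(rec["id.resp_h"])
        if src is None or dst is None or src == dst:
            continue
        if (src, dst) not in ALLOWED:
            yield rec


# Records shaped like parsed Zeek conn.log entries (all values are made up).
sample = [
    {"uid": "C1", "id.orig_h": "10.10.4.7", "id.resp_h": "10.10.9.1"},
    {"uid": "C2", "id.orig_h": "10.30.0.5", "id.resp_h": "10.20.0.8"},
    {"uid": "C3", "id.orig_h": "10.10.4.7", "id.resp_h": "10.20.0.8"},
]

violations = list(find_violations(sample))
for rec in violations:
    print(rec["uid"], rec["id.orig_h"], "->", rec["id.resp_h"])
```

In this sketch only the attendee-to-registration flow is reported. Same-segment traffic is skipped here for brevity; a hunt that validates host isolation would need to examine that traffic too. An empty result is still a useful outcome: it supports the hypothesis that the boundary is holding, and the hunt can move on.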

Sometimes the mistake isn’t a mistake

Misconfigurations are just one of the failure modes that can expose a crack in a Zero Trust Architecture. Another is malicious intent. Recently, someone (or a group of someones) succeeded in getting malicious, backdoored code merged into XZ Utils, and that code was days away from wider distribution in major, trusted Linux distributions before it was miraculously caught by one individual. If the bad actor(s) had been successful, they would have gained remote, unauthenticated access to wide swaths of systems with SSH exposed to the internet, and then access to any other systems that those systems had access to.

A supply-chain attack of this magnitude highlights a huge vulnerability in the overall system: When you choose to trust a vendor or supplier (commercial or open source), you are implicitly trusting every one of their vendors, every software project they depend on, and every individual that touches any part of it.

This vulnerability is brilliantly and concisely demonstrated in Randall Munroe’s XKCD comic #2347:

Reference: https://xkcd.com/2347/

The XZ Utils supply-chain attack is just one example of a supply-chain vulnerability that almost caused widespread havoc in many organizations. Other recent examples include Polyfill, Log4Shell, SolarWinds/Sunburst, and Heartbleed.

The moral: Nobody knows what is going to be the next big vulnerability in some component of a software supply chain, or when it will come. Therefore, we should all be preparing now for a disruption that almost certainly will occur when we least expect it.

Having a Zero Trust Architecture in place will help mitigate the risk, and having good network monitoring in place will ensure that teams will be able to definitively say whether they were affected, to what extent, and whether their countermeasures were effective.

How do I monitor thee? Let me count the ways.

In the Black Hat NOC, we take the threat of a sudden strike seriously. Even though we have done our best to implement robust segmentation and authentication, we still watch, in several ways.

First, we designed custom dashboards for monitoring the critical assets to visualize their activity over time. This makes it easy for us to understand the baseline behaviors and watch for any changes. This is a measure almost any team can accomplish, assuming they have access to reliable data/metadata about what assets are doing on the network, so it’s a natural starting point.
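The idea behind a baseline can be shown with a small Python sketch. It is not how our dashboards are built: the asset names, the hourly connection counts, and the three-standard-deviation threshold are all assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def deviates(history, current, threshold=3.0):
    """Return True if current lies more than `threshold` standard
    deviations away from the mean of the historical values."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is a deviation.
        return current != mu
    return abs(current - mu) / sigma > threshold


# Hypothetical hourly connection counts per asset (made-up numbers).
baseline = {
    "dns-server": [120, 130, 125, 118, 127],
    "reg-kiosk": [40, 42, 38, 41, 39],
}
latest = {"dns-server": 126, "reg-kiosk": 400}

flagged = [name for name, hist in baseline.items() if deviates(hist, latest[name])]
print(flagged)
```

Here the kiosk's jump from roughly 40 connections per hour to 400 is flagged, while the DNS server stays within its normal range. A real deployment would likely track several metrics per asset and account for time-of-day patterns.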

Second, we have taken advantage of the extensibility of the Zeek and Suricata tooling that underpins the platform by designing custom detections and alerts. With Suricata, we designed IDS rules that look for sensitive actions specific to the Black Hat network (e.g., detecting proprietary data in places where we don’t expect to see it). With Zeek’s built-in scripting language, we constructed more powerful detection logic to look for more complex behaviors that we don’t expect to see on the Black Hat network but want to be alerted to. Building Suricata rules and custom Zeek scripts requires sophistication, skills, and experience that some teams may need time to develop. However, being able to tailor your detection logic to your own network and assets is extremely valuable, because an adversary won’t be able to predict and evade the defenses in place.

Finally, we take advantage of the detailed data that Corelight sensors provide to feed machine learning (ML) models. This allows us to cluster the assets based on behaviors in a number of ways, look for patterns and outliers and proactively investigate assets before they exhibit clear signs of compromise. This technique requires personnel with data science and information security cross-functional experience, so it’s another high bar to surmount. But it’s a worthy aspiration since it adds many more opportunities for detection if done properly.

Example of some of the clustering analysis on the infrastructure at Black Hat.
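To give a feel for behavior-based outlier hunting, here is a deliberately simple Python sketch. It does not reproduce the models used in the NOC: it swaps in nearest-neighbor distance as a stand-in for a real clustering model, and the asset names, features, and values are all hypothetical.

```python
import math

# Hypothetical per-asset features:
# (connections per hour, distinct peers, megabytes sent). Values are made up.
features = {
    "ap-01": (200.0, 50.0, 30.0),
    "ap-02": (210.0, 55.0, 28.0),
    "ap-03": (190.0, 48.0, 33.0),
    "kiosk-01": (40.0, 3.0, 2.0),
    "kiosk-02": (42.0, 4.0, 2.5),
    "kiosk-03": (45.0, 3.0, 900.0),
}


def scale(vectors):
    """Min-max scale each feature column to [0, 1] so no feature dominates."""
    cols = list(zip(*vectors.values()))
    lo = [min(c) for c in cols]
    span = [(max(c) - min(c)) or 1.0 for c in cols]
    return {
        name: tuple((x - l) / s for x, l, s in zip(vec, lo, span))
        for name, vec in vectors.items()
    }


def nearest_neighbor_distance(scaled):
    """Map each asset to the Euclidean distance to its closest other asset."""
    return {
        a: min(math.dist(va, vb) for b, vb in scaled.items() if b != a)
        for a, va in scaled.items()
    }


scores = nearest_neighbor_distance(scale(features))
outlier = max(scores, key=scores.get)
print(outlier)
```

Assets that behave like their peers sit close to at least one neighbor, so the asset with the largest score is the one worth a closer look. In this made-up data that is the kiosk sending far more data than the other kiosks.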

Conclusion

Every organization should be working towards a Zero Trust Architecture in all parts of their network: It’s a critical piece of the adversary-prevention-and-detection puzzle. However, maintaining Zero Trust requires teams to be proactive and observant, so that organizations can notice and understand where, how and why their implementation falls short of their plans. Monitoring also provides organizations with the opportunity to detect adversaries or malicious insiders that may be attempting to work through the maze of prevention mechanisms in place.

The visibility provided by Corelight’s Open NDR makes it an ideal choice for defenders to augment their Zero Trust implementation while its flexibility allows teams to customize detections to their specific network design. It’s an essential component of a layered defense strategy that adversaries can’t see.

Tagged With: NDR, DevSecOps, BlackHat, NOC, Operational Technology
