Light in the darkness: New Corelight Encrypted Traffic Collection

Version 18 of our software features the Encrypted Traffic Collection which focuses on SSH, SSL/TLS certificates and insights into encrypted network...

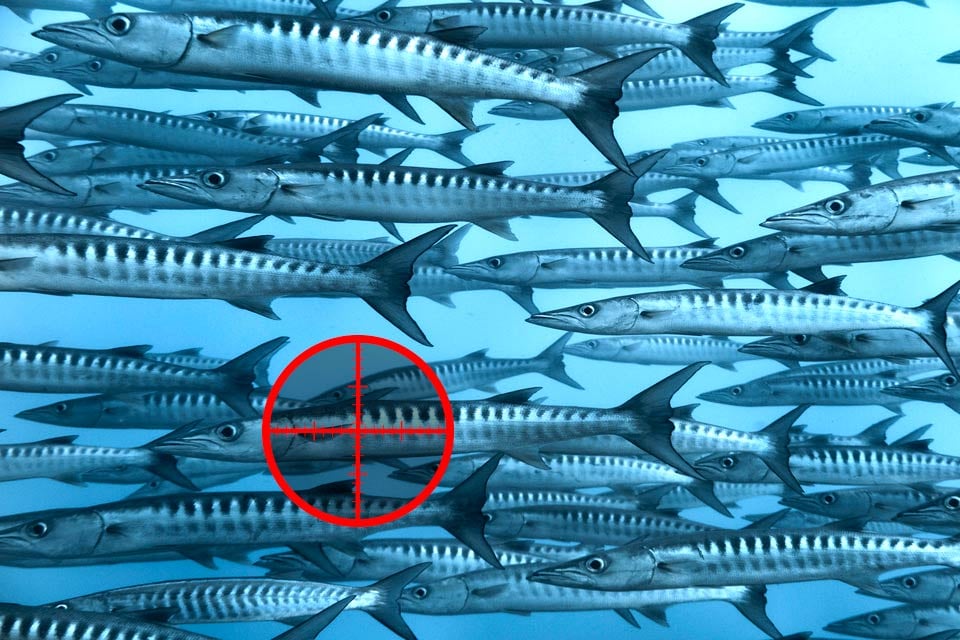

Some of the most costly security compromises that enterprises suffer manifest as tiny trickles of behavior hidden within an ocean of other site activity. Finding such incidents, and unraveling their full scope once detected, requires far-ranging network visibility, such as provided by Corelight Sensors, or, more broadly, the open-source Bro system at the heart of our appliances.

Wading through vast amounts of data to find genuine threats can prove intractable without aids for accelerating the process. An important technique for enabling detection is the development of highly accurate algorithms that can automate much of the task. In this post, I sketch one such algorithm that I recently worked on at UC Berkeley along with two Ph.D. students, a member of the security team at the Lawrence Berkeley National Laboratory (LBL), and a fellow UCB computer science professor. The research concerns detection of spearphishing and we published it last month at the USENIX Security Symposium, where it won both a Distinguished Paper award and this year’s Internet Defense Prize, a $100,000 award sponsored by Facebook.

As you probably know, spearphishing attacks are a form of social engineering where an attacker manually crafts a fake message (sent via email or social networking) targeting a specific victim. The message often includes carefully researched details that improve the likelihood that the victim will believe the message is legitimate, when in fact it isn’t. We call this facet of spearphishing the lure. The message entices the victim to take some unsafe action (the exploit), such as providing login credentials to a fake web page posing as an important site (e.g., corporate Gmail), opening a malicious attachment, or wiring money to a third party.

In our work, we collaborated closely with the cybersecurity team at LBL to tackle the problem of detecting spearphishing attacks that result in victims entering their credentials into fake web pages. The Lab – an enterprise with thousands of users – maintains rich and extensive logs of past network activity generated by Bro, and for real-time detection operates numerous Bro instances. (I worked at LBL when I first developed Bro in the mid-1990s, and the Lab has been a key user of it ever since.)

For our recent work, we drew upon LBL’s Bro logs of 370 million emails, along with all HTTP traffic transiting their border, and LDAP logs recording the authentications of Lab users to the corporate Gmail service. (To get a sense of the richness of Bro data, check out the information it provides for SMTP and HTTP.) We were also able to cross-reference with their security incident database to assess the accuracy of the different approaches we explored and developed. All of this data spanned 4 years of activity.

The key idea we leveraged was to extract all of the URLs seen in incoming emails and then look for later fetches of those URLs by Lab users. Such a pattern of activity matches that of a common type of credential spearphishing, where the lure is a forged email seemingly from a trusted party. The lure exhorts the recipient to follow a link to take some sort of (urgent) action. However, this activity pattern also matches an enormous volume of benign activity. Thus, the art of successfully performing such detection is to find ways to greatly winnow down the raw set of activity matching the behavioral pattern to a much smaller set that an analyst can feasibly assess – without discarding any actual attacks during the winnowing.
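To make the email-then-click pattern concrete, here is a minimal sketch of the correlation step. It is not the code we ran at LBL: the record layouts (dicts with `ts`, `body`, and `url` keys), the regular expression, and the function names are all assumptions for illustration, and a real pipeline would read Bro's SMTP and HTTP logs and normalize URLs far more carefully.

```python
import re
from collections import defaultdict

# Deliberately simple URL matcher (an assumption, not a full URL grammar).
URL_RE = re.compile(r"https?://[^\s<>\"']+")

def extract_urls(body):
    """Return the set of URLs in an email body, trimming trailing punctuation."""
    return {u.rstrip(".,;:!?)") for u in URL_RE.findall(body)}

def email_click_pairs(emails, http_requests):
    """Pair each HTTP fetch with earlier emails that contained the same URL.

    emails:        iterable of dicts with hypothetical keys "ts" and "body"
    http_requests: iterable of dicts with hypothetical keys "ts" and "url"
    """
    emails_by_url = defaultdict(list)  # url -> emails that contained it
    for email in emails:
        for url in extract_urls(email["body"]):
            emails_by_url[url].append(email)

    pairs = []
    for req in http_requests:
        for email in emails_by_url.get(req["url"], []):
            # Keep only fetches that happen after the email arrived.
            if req["ts"] >= email["ts"]:
                pairs.append((email, req))
    return pairs
```

Every pair this returns is only a candidate: almost all of them will be benign, which is why the rest of the approach is about winnowing.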

In previous projects, I’ve likewise tackled some needle-in-haystack problems. I worked with students and colleagues on developing detectors for surreptitious communication over DNS queries and for attacks broadly and stealthily distributed across many source machines. From these efforts, as well as this new effort on detecting spearphishing, several high-level themes have emerged.

First, it is very difficult to apply machine learning to these problems. The most natural machine learning techniques to use, “supervised” ML, require labeled data to work from. For spearphishing, this would be examples of both benign email+click instances and malicious ones, which comprise two separate classes. The ML would then analyze a number of features associated with email+click instances to find an effective classifier that, when given a new instance, uses that instance’s features to accurately associate it with either the benign or the malicious class.

While supervised ML can prove very effective for some problem domains, such as detecting spam emails based on their contents, for needle-in-haystack problems it runs into major difficulties due to the enormous “class imbalance”. For the spearphishing problem, for example, we can provide an ML algorithm with 370 million examples of benign email+click instances, but fewer than 20 malicious instances, since such attacks only very rarely succeed at LBL. In these situations, the ML will very often overfit to the class with very few members, emphasizing features among its instances (such as the specific names used in emails) that have no general power.

Detectors for highly rare attacks, whether or not based on ML, face another problem concerning the base rate of the attacks. A simple way to illustrate this is to consider a detector for email-based spearphishing that has a false positive rate of only one-in-1,000 (0.1%). When processing 370 million emails, this seemingly highly accurate detector will generate 370,000 false positives, completely overwhelming the security analysts with bogus alerts.
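The base-rate arithmetic is worth spelling out. The sketch below simply reproduces the numbers from the paragraph above; the function name is made up for illustration, and the per-day figure assumes the emails are spread evenly over four 365-day years.

```python
def expected_false_positives(n_events, one_in):
    """Expected false alarms when one in `one_in` benign events is misflagged."""
    return n_events / one_in

N_EMAILS = 370_000_000   # emails in the LBL data set (from the text)
DAYS = 4 * 365           # assumption: four flat 365-day years

total_fp = expected_false_positives(N_EMAILS, one_in=1000)
fp_per_day = total_fp / DAYS

print(f"total false positives: {total_fp:,.0f}")
print(f"false positives per day: {fp_per_day:.0f}")
```

Under these assumptions the "one-in-1,000" detector raises roughly 250 bogus alerts every day, set against a handful of real attacks over the entire four years.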

In working on past needle-in-haystack problems, I’ve come to appreciate the power of (1) not trying to solve the whole problem, but rather finding an apt subset to focus on, (2) devising extensive filtering stages to reduce the enormous volume of raw data to a much smaller collection that will still contain pretty much all of the instances of the (apt subset of the) activity we’re trying to detect, and (3) aiming not for 100% detection, but instead to present the analyst with high-quality “leads” to further investigate.

For our spearphishing work, the subset of the problem we went after was email-based attacks that involve duping the target into clicking on a URL, and for which the target indeed did wind up clicking. We framed our approach around an analyst “budget” of 10 alerts per day. That is, on average, on any given day our detector will not produce more than that many alerts. We set the number of alerts to 10 because the LBL security staff deals with a couple hundred monitoring alerts per day, so getting 10 more does not add an appreciable burden – assuming that alerts that are false positives are cheap enough to deal with, a point I return to below.

We then identified a set of features to associate with emails containing URLs and any subsequent clicks on those URLs. This part took a great deal of exploration – indeed, the entire project spanned two years of effort.

For the most part, the features draw upon the site’s history of activity. For example, one email feature is the number of past days on which a given email From name (e.g., “Vern Paxson”) was seen together with a given From address (e.g., “<vern@corelight.com>”). For clicked URLs, one example feature is how many clicks the domain hosting the URL received before the associated email arrived. To detect spearphishing sent from already-compromised site accounts, we also analyze LDAP logs to correlate emails with the preceding corporate Gmail authentication used by the account that sent the email, drawing upon features such as how many of the site’s employees have previously authenticated from an IP address located in the same city as was used this time.
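As a rough illustration of what "drawing upon history" means, here is a sketch of two such features. The `SiteHistory` class, its method names, and the use of the URL's hostname as a stand-in for its domain are all assumptions made for this example; they are not the data structures from our study.

```python
from collections import defaultdict
from urllib.parse import urlsplit

class SiteHistory:
    """Hypothetical in-memory history of sender identities and clicks."""

    def __init__(self):
        self.sender_days = defaultdict(set)     # (from_name, from_addr) -> days seen
        self.domain_clicks = defaultdict(list)  # hostname -> click timestamps

    def record_email(self, from_name, from_addr, day):
        self.sender_days[(from_name, from_addr)].add(day)

    def record_click(self, url, ts):
        self.domain_clicks[urlsplit(url).hostname].append(ts)

    def days_name_seen_with_addr(self, from_name, from_addr, before_day):
        """Number of distinct earlier days this From name appeared with this address."""
        days = self.sender_days.get((from_name, from_addr), set())
        return sum(1 for d in days if d < before_day)

    def clicks_on_domain_before(self, url, email_ts):
        """Number of clicks on the URL's host recorded before the email arrived."""
        clicks = self.domain_clicks.get(urlsplit(url).hostname, [])
        return sum(1 for ts in clicks if ts < email_ts)
```

A familiar name paired with a never-before-seen address, or a link to a host nobody at the site has visited, yields a value of zero, which is the kind of signal the features are meant to surface.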

We wound up identifying eight such features (though it turns out we don’t use all of them together). For each email+click instance, we compute the values of the relevant features and score the combination in terms of how many previous instances were strictly less anomalous than it (i.e., had more benign values for every one of the features). Our detector then flags the instances with the highest such scores for the analyst to investigate further, staying within the budget of an average of no more than 10 alerts per day.
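The scoring rule can be sketched in a few lines. This is a simplified illustration, not our implementation: it assumes each instance has already been reduced to a tuple of numeric feature values oriented so that larger means more benign, it compares against history by brute force, and it applies the budget to a single batch rather than as a long-run daily average. The function names are hypothetical.

```python
def anomaly_score(instance, history):
    """Count past instances that are more benign than `instance` on every feature.

    Assumption: features are oriented so that larger values are more benign.
    """
    return sum(
        1
        for prev in history
        if all(p > x for p, x in zip(prev, instance))
    )

def top_alerts(instances, history, budget):
    """Return indices of the `budget` highest-scoring instances, best first."""
    scored = [(anomaly_score(x, history), i) for i, x in enumerate(instances)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:budget]]

# Toy example with two features per instance.
history = [(30, 500), (10, 40), (2, 3), (0, 100)]
todays_instances = [(40, 600), (0, 0), (5, 10)]
alerts = top_alerts(todays_instances, history, budget=2)
```

Because an instance only earns credit from past instances that beat it on every feature at once, no weights or thresholds need to be learned, which sidesteps the class-imbalance problem described earlier.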

The detector has proven to be extremely accurate. In our evaluation of 4 years of activity, it found 15 of the 17 known attacks in LBL’s incident database. In addition, it found 2 attacks previously unknown to the site. It achieved this with a false positive rate less than 0.005%.

Finally – and this is critical – we measured how long it takes an analyst to deal with a false positive, and it turns out that the vast majority can be discarded in just a few seconds. This is because, for most of the false positives, it’s immediately clear just from their Subject line or their sender that surely they do not represent a carefully crafted spearphish. An attacker will not dupe a user into clicking on a link and typing in their credentials using a Subject line such as “DesignSpark – Boot Linux in a second” (an actual example from our study). As soon as an analyst scans that Subject line, they can discard the alert from further consideration. As a result, it typically takes an analyst only a minute or two per day to deal with the alerts from our detector.

In summary, our work showed that we can make significant strides towards combating spearphishing attacks by (1) cross-correlating different forms of network activity (URLs seen in emails, subsequent clicks on those seen in HTTP traffic, LDAP authentication) in order to (2) find activity that we deem suspicious because, for a carefully engineered set of features, the activity manifests a constellation of values rarely seen in historical data. We also, crucially, (3) aim not for 100% perfect detection, but to present a site’s analyst with a manageable volume of high-quality alerts to then further investigate, and (4) find that these investigations take very little time if the alert is a false positive.

This sort of detection underscores some of the major benefits Bro can provide: illuminating disparate forms of network activity, producing rich data streams that sites can archive for later examination, and enabling analysts to zero in on problematic behavior.
