Corelight data and LLMs

Accelerate alert analysis with Corelight’s LLM prompts for Suricata and Corelight data, featuring summaries, threat analysis, and next steps.

The explosion of interest in artificial intelligence (AI), and specifically large language models (LLMs), has taken the world by storm. The duality of power and risk that this technology holds is especially pertinent to cybersecurity. On one hand, the capabilities of LLMs for summarization, synthesis, and creation (or co-creation) of language and content are mind-blowing. On the other hand, there are serious concerns in the cyber realm, not only around AI hallucinations but also around misuse of this technology for sowing misinformation, creating rocket fuel for social engineering, and even generating malicious code. Our Corelight co-founder, Greg Bell, lays out a nice analogy in a recent article for Forbes, which you can dive into here.

Instead of focusing on the future of AI, I want to share some of the ways that Corelight has been thinking about and using AI in our NDR products today. Our approach is to leverage AI where it can make our customers more productive in their day-to-day security operations, and to do that in a way that is both responsible and respectful of our customers' data privacy. Increasing SOC efficiency by generating better detections and upleveling analyst skills faster directly addresses the cybersecurity workforce challenges that every organization is struggling with.

The umbrella term AI covers all the capabilities of machine learning (ML), including LLMs. Corelight uses ML models for a variety of detections throughout our Open NDR Platform, both directly on our sensors as well as in our Investigator SaaS offering. Having this powerful capability at the edge and in the cloud allows our customers, whether deployed in air-gapped or fully cloud-connected environments, to harness the power of our ML detections.

From finding C2 channels to identifying malware, ML continues to be a powerful tool in our analytics toolbox. Our supervised and deep learning ML models allow for targeted and effective detections that minimize the false positives commonly associated with some other types of ML models. Our models can identify behaviors like domain generation algorithms (DGAs) which may indicate a host infection, watch for malicious software being downloaded, and identify attempts to exfiltrate data from an organization through covert channels like DNS. We also use deep learning techniques to identify URLs and domains that attempt to trick users into submitting credentials or installing malware, helping to stop attacks early in the life cycle.
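As a rough illustration of what a DGA-oriented signal can look like, here is a minimal sketch of a single toy feature: the character entropy of a domain's leftmost label. This is not Corelight's model, which is described above only as supervised and deep learning; the function name `label_entropy` and the sample domains are assumptions made up for this example, and a real classifier would combine many features.

```python
import math
from collections import Counter


def label_entropy(domain: str) -> float:
    """Shannon entropy (bits per character) of the leftmost DNS label.

    Hypothetical helper for illustration only. Algorithmically generated
    names often look more random than human-chosen ones, so they tend to
    score higher, but this alone is far too weak to be a detection.
    """
    label = domain.lower().split(".")[0]
    if not label:
        return 0.0
    counts = Counter(label)
    total = len(label)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


if __name__ == "__main__":
    # Made-up sample domains; the second imitates a random-looking DGA name.
    for name in ("google.com", "xj4k9qz2vbn7.com"):
        print(name, round(label_entropy(name), 3))
```

In practice, a feature like this would be one input among many to a trained model rather than a threshold applied on its own.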

Providing effective ML-based detections is only the beginning of our approach. Having the appropriate context and explainability around our detections is essential to faster triage and resolution. We provide detailed views into what is usually a “black box” of ML detection. Our Investigator platform provides an exposition of the features that make up the model, as well as the weightings that led to a specific detection. That data gives analysts a view into what specific evidence to pivot to for the next steps of an investigation.
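To show what exposing features and weightings can mean in the simplest case, here is a hedged sketch using a linear (logistic) scorer, where each feature's contribution is its weight times its value. The model type, the function `explain_detection`, and the feature names are assumptions for illustration; Investigator's actual models and presentation may differ.

```python
import math
from typing import Dict, List, Tuple


def explain_detection(
    weights: Dict[str, float],
    features: Dict[str, float],
    bias: float = 0.0,
) -> Tuple[float, List[Tuple[str, float]]]:
    """Return (score, contributions ranked by absolute impact).

    Assumes a simple logistic model; this is a sketch, not a description
    of any Corelight model.
    """
    # Per-feature contribution to the logit: weight * observed value.
    contributions = {name: weights[name] * value for name, value in features.items()}
    logit = bias + sum(contributions.values())
    score = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    # Hypothetical feature names and weights, chosen only for the example.
    weights = {"query_entropy": 2.0, "domain_age_days": -0.01, "nxdomain_ratio": 1.5}
    features = {"query_entropy": 1.2, "domain_age_days": 30.0, "nxdomain_ratio": 0.8}
    score, ranked = explain_detection(weights, features)
    print(f"score={score:.3f}")
    for name, contribution in ranked:
        print(f"{name}: {contribution:+.2f}")
```

The ranked list is the part an analyst would care about: the features at the top point to the evidence worth pivoting to first.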

We continually build new models and tune our existing ones to make sure that our customers are protected against the latest threats we see in the real world. We are also prototyping an anomaly detection framework with broad applicability to a variety of behavioral use cases, from authentication to privilege escalation, while still providing the level of explainability that our customers have come to expect from Corelight.

In our experiments with LLMs, we became convinced early on that summarization and synthesis of existing information was the best application for the current maturity of LLMs. In our initial tests, we found them weak at creating detections by discerning legitimate from malicious network traffic, but we validated that these language models can deliver powerful context, insights, and next steps to help accelerate investigation and educate analysts. We also benefit from our platform and resulting data being based on open-source tools like Zeek® and Suricata, which many commercial LLMs are already trained on. Since Corelight produces a gold standard, open data format for NDR, we quickly delivered a powerful alert summarization and IR acceleration feature in our Investigator platform, driven by GPT.

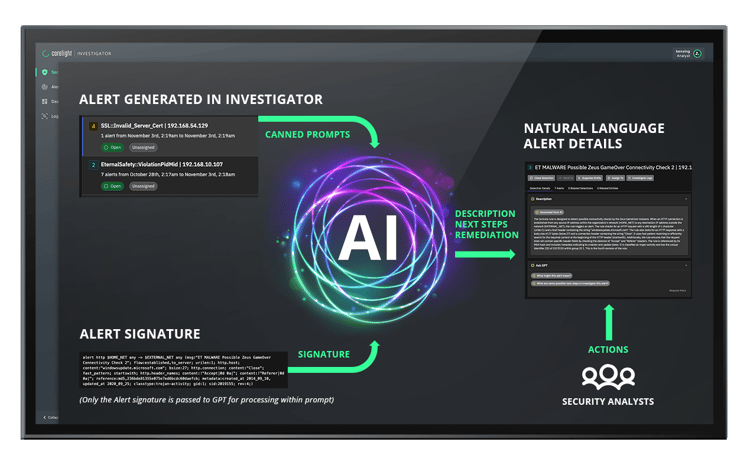

Here’s how it works:

Corelight researchers create, test, and validate a limited number of prompts focused on security alerts created by the Corelight platform. These vetted prompts are then used to query GPT based solely on the alert metadata (no specific customer data is sent), and the results are cached in our SaaS infrastructure. A customer who views an alert is automatically shown the GPT alert summary and can access potential next steps for investigating and remediating the alert simply by clicking a series of supplemental prompt “clouds.” Customers can also suggest additional prompts and ways to improve the GPT output. No customer data is ever sent to GPT, and no traffic from the customer’s site ever goes to GPT, as this is handled by Corelight’s SaaS infrastructure.
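The flow described above (vetted prompts, alert metadata only, cached responses) can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not Corelight's implementation: the field names in `ALLOWED_FIELDS`, the prompt templates, the `PromptCache` class, and the injected `llm` callable are all hypothetical, and the model call is stubbed so the example runs offline.

```python
import hashlib
from typing import Callable, Dict

# Hypothetical allow-list: only generic alert metadata may reach the prompt.
ALLOWED_FIELDS = ("alert_name", "alert_category", "severity")

# Hypothetical vetted prompt templates, keyed by a prompt identifier.
VETTED_PROMPTS = {
    "summary": "Summarize the security alert '{alert_name}' "
               "(category: {alert_category}, severity: {severity}).",
    "next_steps": "List investigation next steps for the alert '{alert_name}'.",
}


def strip_to_metadata(alert: dict) -> dict:
    """Keep only allow-listed fields; IPs, hostnames, payloads are dropped."""
    return {field: alert.get(field, "unknown") for field in ALLOWED_FIELDS}


class PromptCache:
    """Answers vetted prompts, calling the model once per distinct prompt."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm  # injected model call (assumption; stubbed below)
        self._cache: Dict[str, str] = {}

    def answer(self, prompt_id: str, alert: dict) -> str:
        prompt = VETTED_PROMPTS[prompt_id].format(**strip_to_metadata(alert))
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self._cache[key] = self._llm(prompt)
        return self._cache[key]


if __name__ == "__main__":
    def stub_llm(prompt: str) -> str:
        # Stand-in for a real model call.
        return f"[model response to: {prompt}]"

    cache = PromptCache(stub_llm)
    alert = {
        "alert_name": "Example Suricata signature",
        "alert_category": "malware",
        "severity": "high",
        "src_ip": "10.0.0.5",  # customer-specific; never placed in the prompt
    }
    print(cache.answer("summary", alert))
```

Because the cache key depends only on the rendered prompt, two customers who see the same alert type share one cached response, and nothing customer-specific is needed to produce it.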

We believe that by carefully controlling the prompts and responses for accuracy, and ensuring that no customer data goes to GPT, we have found a middle ground to provide great value from LLMs without compromising customer privacy. This approach has been consistently validated by our customers and the analysts and partners that we work with.

Summarization and providing next steps are a great start, and we are now beginning to experiment with adding context, including information about IOCs, MITRE ATT&CK® TTPs, threat actors, and more, as well as continuing to educate security analysts about the best way to use Zeek and Corelight evidence to investigate and respond to alerts. With further fine-tuning and specific knowledge of Corelight data, we’re finding that the models are becoming more capable of handling additional information and workflow steps. One consideration here is that, to make use of some of these additional features, we need to “cross the bridge” by having the models directly handle customer data: without access to the additional network evidence, the models can’t assist. We’ve taken a conservative initial approach of not sharing any customer data with the LLMs. However, we anticipate that with the appropriate safeguards some customers may opt to share their data to gain access to a new suite of AI-enabled workflow accelerations.

While we began our LLM explorations with OpenAI's GPT, we continue to track the incredible growth in the market of new models and platforms built around LLMs coming from every corner of the tech industry. In addition to our work with GPT, we have built collaborative relationships with other LLM developers, for example through the Microsoft Security Copilot private preview program discussed in this announcement. These relationships give us an opportunity to influence and shape elements of their product development.

ML detections and ML-assisted workflows are just a few of the ways that we are using AI in our products, but there is plenty more going on behind the scenes. Be on the lookout for many more exciting developments over the coming months focused on Corelight’s use of AI to make investigation workflows more efficient, generate more effective detections, and help uplevel analysts' understanding of network data. In the meantime, you can learn more about our LLM integration in Investigator, discover how our use of ML enhances our analytics, and see how it all fits together in our complete Open NDR Platform offering.
