- What is anomaly-based detection?

- What is the purpose of anomaly-based detection?

- The basics of anomaly-based detection data science: examples outside of the security realm

- What are the key differences between anomaly-based detection and signature-based detection?

- The benefits of anomaly-based detection

- Challenges of anomaly-based detection

- How Corelight improves detection by leveraging multiple detection options

What is anomaly-based detection?

Anomaly-based detection, sometimes known as behavior-based detection, is a method that uses data analysis and rules to help identify evidence of potential malicious activity in digital systems.

Unlike signature-based detection, which focuses on known indicators of compromise (IOCs), anomaly-based detection does not search for known malware characteristics. Instead, it establishes a baseline for normal network behavior and, through continuous tuning and adaptation, identifies deviations from that baseline that may be evidence of malicious activity.
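As a rough illustration of the baseline idea, the sketch below summarizes past observations as a mean and standard deviation and flags new values that fall far outside them. The traffic figures, the function names, and the threshold of three standard deviations are all assumptions chosen for the example, not values from any particular product.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarize past observations as a (mean, standard deviation) pair."""
    return mean(history), stdev(history)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical outbound megabytes per hour for a single host
history = [120, 131, 118, 125, 140, 122, 129, 135, 127, 124]
baseline = build_baseline(history)

for observed in (133, 900):
    print(observed, is_anomalous(observed, baseline))
```

A flagged value is only unusual, not necessarily malicious; deciding which it is still falls to an analyst or to corroborating evidence.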

Anomaly-based detection is not a standalone security solution. But it can inform a proactive threat-informed defense (TID) strategy, which also may include signature-based detection and hunting methods based on knowledge of adversaries’ tactics, techniques, and procedures (TTPs). To be effective, the approach depends on experts who can adjust its monitoring parameters based on experience and an ability to interpret data.

What is the purpose of anomaly-based detection?

The primary strategic objective of anomaly detection is to identify behaviors and attacks that are difficult to identify using other techniques. This typically means tactics that blend into the "noise" of normal activity, or TTPs that have never been seen before. Signatures and other behavioral detections may not catch them, but an approach that looks for anomalies may be able to identify something unusual. Because anomalous does not equal malicious, anomaly detection often creates more work for analysts, who must run down each finding. It is therefore important to target specific use cases to make defense more effective.

Anomaly-based detection can also support security models such as Zero Trust Architecture and Secure Access Service Edge (SASE) that are gaining acceptance in many industries. These models do not specifically incorporate anomaly-based detection as a part of their solution, but their core objective of controlling identity and access is much easier to attain with a proactive, real-time detection method that monitors behavior rather than simply searching for known threat signatures.

Organizations that need to maintain compliance with industry data protections (e.g. HIPAA, GDPR, SOX, SOC 2) may also require a more rigorous and proactive detection that will depend on automated behavior-based monitoring. Whether or not compliance is an issue, however, any organization that relies on the access to, storage, and transfer of sensitive or proprietary information can and should seriously consider adding anomaly-based detection tools and methodologies to their detection arsenal.

Anomaly-based detection is sometimes described as a combination of the right tools, skill, science, and art. There is no single right approach; every detection team will calibrate its methodology depending on the architecture of its systems and current cyber intelligence. The patterns it detects should give threat hunters a reliable jumping-off point for a theory that can form the basis for an effective threat hunt. Anomalies can also serve as corroborating evidence for a "conviction" and, in some cases, as the starting point for an alert.

Detection evolving from statistical analysis to ML data science

While security teams will depend on automated suppression filters and supervised learning algorithms to prioritize network events, the human element is still crucial to anomaly-based detection. Machine learning is a critical tool in the process, but it is not yet at the level where it can be truly predictive. Any anomaly-based intrusion method will rely in part on collections and the tools provided by vendors, context provided by network data and user behavior, and expert experience that weighs these different intelligence sources and adjusts them as needed.

Machine learning

In general, artificial intelligence, or AI, depends on access to data. Machine learning can be supervised, meaning algorithms are trained on a labeled dataset in which each input is paired with a target output value, so that the system learns a “like with like” classification. Over time, data scientists can train the algorithm to detect patterns and label new, incoming data based on those examples (the “supervision”). Once the administrator establishes the model’s validity, it can evaluate new data sets and make classification decisions.

When applied to threat detection, data scientists can provide the algorithm with data associated with known threat intelligence, historical information, or collection. By also providing the model with normal local traffic packets, along with characteristics and patterns of known remote access tools (RAT) and general cyber threats, the model can start making predictions that go beyond simply recognizing known signatures and begin to evaluate the nature of anomalous behavior.
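To make the supervised workflow concrete, here is a minimal sketch that uses k-nearest neighbors, one of many possible classifiers. The two features, the labels, and the training rows are hypothetical; a real model would train on far more data and far richer features.

```python
from collections import Counter
from math import dist

def knn_predict(training, point, k=3):
    """Label a point by majority vote among its k nearest labeled examples."""
    nearest = sorted(training, key=lambda example: dist(example[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical features: (connections per minute, average payload size in KB)
training = [
    ((5, 40), "benign"),
    ((7, 35), "benign"),
    ((6, 50), "benign"),
    ((60, 1), "beacon"),
    ((55, 2), "beacon"),
    ((65, 1), "beacon"),
]

print(knn_predict(training, (58, 3)))
print(knn_predict(training, (8, 45)))
```

The labels are the "supervision": the model can only assign categories it has already been shown.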

Machine learning models can also be unsupervised. In this case, the model learns from analysis of unlabeled data, that is, without any guiding input from administrators. While this method can be effective at detecting anomalies, it struggles to classify, or label, its findings.
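A small sketch of the unsupervised case, assuming a simple distance-based outlier score (one of many possible techniques): each unlabeled point is scored by the distance to its k-th nearest neighbor, and the point farthest from its peers surfaces as the anomaly. The feature values are hypothetical.

```python
from math import dist

def knn_distance_scores(points, k=2):
    """Score each point by its distance to its k-th nearest neighbor."""
    scores = []
    for i, p in enumerate(points):
        distances = sorted(dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(distances[k - 1])
    return scores

# Unlabeled, hypothetical features: (logins per hour, distinct hosts contacted)
points = [(3, 4), (4, 5), (3, 5), (4, 4), (5, 5), (40, 60)]
scores = knn_distance_scores(points)
outlier = points[scores.index(max(scores))]
print(outlier)
```

The score says only that the point is unlike the others. It carries no label explaining why, which is the classification gap described above.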

Algorithms for training AI

There is no one data science approach that informs threat detection. Depending on the circumstances and their interpretation of the data, experts may vary their methods to achieve the optimal result—or at least avoid generating wrong or misleading results. In terms of anomaly detection, security teams may deploy a variety of algorithms to find the best approach for monitoring their systems.

The following general classifications may inform a machine-learning model:

Statistical algorithms. As the name implies, these algorithms rely on statistics and data aggregation techniques to discern patterns in data and generate predictions that can form the basis for automated or human decision-making. Examples include decision trees, random forests, logistic regression, and Naive Bayes.

Non-statistical algorithms. Instead of statistical patterns, these algorithms depend on rule sets and formal logic constructs to generate interpretations of data sets. Examples include knowledge graphs, if-then rule constructs, and systems based directly on human expertise.
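The if-then style of non-statistical detection can be sketched as a list of named conditions evaluated against each event. The rule names, field names, and thresholds below are illustrative assumptions, not rules from any real product.

```python
def evaluate_rules(event, rules):
    """Return the name of every rule whose condition matches the event."""
    return [name for name, condition in rules if condition(event)]

# Hypothetical rules encoding analyst expertise as explicit conditions
rules = [
    ("off_hours_admin_login",
     lambda e: e["role"] == "admin" and not (8 <= e["hour"] < 18)),
    ("large_outbound_transfer",
     lambda e: e["bytes_out"] > 500_000_000),
]

event = {"role": "admin", "hour": 3, "bytes_out": 1_200}
print(evaluate_rules(event, rules))
```

Unlike a statistical model, every decision here traces back to a rule a person wrote, which makes the output easy to explain but limits it to situations someone anticipated.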

As with most other aspects of AI-driven threat hunting, there are not always clear, practical distinctions between the algorithms in use. Security data scientists may deploy algorithms that are effective hybrids of both approaches.

Algorithms can also be classified in terms of specific methods of data aggregation and patterning. Examples include:

- Classification algorithms. Commonly used in supervised ML, these algorithms should develop a map between input data and output labels by training on the original dataset. The algorithm will begin to identify relational patterns in the data as training proceeds. Examples include decision trees, random forests, logistic regression, and k-Nearest Neighbors.

- Clustering algorithms. This type of algorithm creates subsets in which the data points share certain characteristics that distinguish them from data points in other sets. Clustering algorithms are useful in pattern recognition; examples include K-means, hierarchical (bottom-up or top-down) clustering, and mean shift.

- Deep learning algorithms. Used in both supervised and unsupervised machine learning, deep learning relies on multi-layered neural networks loosely modeled on the human brain. As data is processed, the network learns representations that go beyond simple pattern recognition and can solve more complex problems. Examples include convolutional neural networks (CNN), recurrent neural networks (RNN), and long short-term memory networks (LSTM).
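Of the families listed above, clustering is the easiest to show in a few lines. The sketch below is a bare-bones K-means (Lloyd's algorithm) with hand-picked starting centroids so the result is repeatable; the points are hypothetical, and production code would normally use a tested library implementation.

```python
from math import dist

def kmeans(points, centroids, iterations=10):
    """Run Lloyd's algorithm from the given starting centroids."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical 2-D feature vectors forming two obvious groups
points = [(1, 2), (2, 1), (1, 1), (9, 9), (10, 8), (9, 10)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(clusters)
```

The algorithm groups similar points without being told what the groups mean; interpreting a cluster as "normal" or "suspicious" is a separate step.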

Key differences between anomaly-based detection and signature-based detection

Signature-based detection systems search for known characteristics (e.g., file hashes, file names, byte patterns, IP addresses, or domains). When there is a match the system (typically an intrusion detection system or a platform with intrusion detection capabilities) generates an alert. The approach is rule-focused and relatively simple to update.
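The matching step described above can be sketched as a set lookup. The indicator sets and the sample payload here are hypothetical placeholders; real systems load indicators from threat feeds and match on many more attributes.

```python
import hashlib

# Hypothetical indicators, built from a placeholder payload for the example
payload_sample = b"example-malicious-payload"
KNOWN_BAD_HASHES = {hashlib.sha256(payload_sample).hexdigest()}
KNOWN_BAD_DOMAINS = {"bad.example"}

def match_signatures(payload, domain):
    """Return an alert name for each exact match against known indicators."""
    alerts = []
    if hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES:
        alerts.append("file_hash_match")
    if domain.lower() in KNOWN_BAD_DOMAINS:
        alerts.append("domain_match")
    return alerts

print(match_signatures(payload_sample, "bad.example"))
# One extra byte and a new domain are enough to evade both indicators.
print(match_signatures(payload_sample + b"!", "good.example"))
```

The second call illustrates the limitation of exact matching: a trivially modified sample no longer produces an alert.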

Tuning a signature-based detection system is generally a simpler proposition than anomaly-based detection. Along with their speed and high accuracy, ease-of-use helps explain why many security teams may still prefer to use signature-based IDS over behavior-based systems. Given the expanding attack surface of most enterprises and the proliferation of modified and novel attack methods, there is a strong case to be made for adopting a behavior-based approach to complement signature-based detection.

By contrast, anomaly-based detection can home in on any network activity that is not consistent with normal activity, which in theory can identify unknown tools or methods, including subtle modifications of known malicious characteristics that signature-based detection would miss. As such, an anomaly- or behavior-based model is capable of detecting zero-day attacks and anomalous activities that are unknown and therefore not included in data collections, provided the system is adequately tuned and human operators have sufficient insight to distinguish a genuine threat from a noisy alert.

Benefits of anomaly-based detection

Anomaly-based detection is not a new concept, but only in recent years has it become a practical model for most enterprises. Improvements in machine learning have made data processing and analysis at the necessary scale more practical for many security teams, provided they have sufficient time, expertise, and resources to commit.

Used in conjunction with other tools, anomaly-based detection can deliver a number of benefits to the enterprise:

- Building behavioral profiles. By leveraging supervised machine learning models, security experts can start to boil down raw traffic associated with malicious activity as well as normal network traffic and begin to isolate patterns that are specific to threats. Over time, security teams can more easily uncover artifacts associated with threats in progress or malicious activity missed in earlier investigations. Even when active threats are not uncovered, the added complexity and richness of the data can help teams understand where degraded network performance or misconfigurations may elevate risk or create vulnerabilities.

- Earlier detection of threats. When security teams are able to identify malicious activity at an early phase of the cyber kill chain, they increase the likelihood of mitigating damage or eradicating threats before attackers can complete their ultimate objectives. Supported by strong network evidence and advanced learning models, an anomaly-based approach can help detect unknown command and control (C2) traffic and even modified versions of known threats.

- Better performance over time. As an iterative model, anomaly-based detection should improve as machine learning capacity expands and security teams learn more about their networks’ baseline activity. It can adapt and flex as networks become more complex, and keep pace with evolving threats. With regular evaluation and tuning, the system’s false positive rate should decline, helping security teams focus on legitimate threats and network issues.

- Support for compliance and detection of privilege abuse or other unauthorized employee activity. As regulations on data storage and handling become more stringent, an anomaly-based detection system can help the enterprise detect potential policy violations, unauthorized access attempts, data removal, and other activities that could jeopardize compliance with industry regulation.

Challenges of anomaly-based detection

Tuning an anomaly-based detection system is a meticulous, iterative process. The system relies heavily on data science methods, machine learning models, and the expertise within a security operations center (SOC) to interpret the results. Used in conjunction with signature-based detections and data collections of adversaries’ tactics, techniques, and procedures (TTPs), anomaly-based detection can create an effective, modern approach to defense that identifies and anticipates attackers’ methods.

The primary challenge associated with anomaly-based (behavior-based) detection is that no single approach, whether automated, executed by humans, or a combination of the two, will be effective against every threat in every circumstance. Even the most sophisticated ML tools at the service of the best security teams can produce false positives, false negatives, or indeterminate results. The value of this model lies in its capacity to become more effective as algorithms are tuned and machine learning models become capable of analyzing ever-larger data sets, so that genuine threats stand out from normal and benign-but-abnormal network behavior.
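One way to track whether tuning is paying off is to compute error rates from triaged alerts. The sketch below uses the standard confusion-matrix definitions; the counts are invented for illustration and do not come from any real deployment.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute common rates from confusion-matrix counts."""
    return {
        # Share of benign events that were wrongly flagged
        "false_positive_rate": fp / (fp + tn),
        # Share of real threats that were missed
        "false_negative_rate": fn / (fn + tp),
        # Share of alerts that turned out to be real threats
        "precision": tp / (tp + fp),
    }

# Hypothetical counts from one week of events, before and after tuning
before = detection_metrics(tp=8, fp=192, tn=9_800, fn=2)
after = detection_metrics(tp=9, fp=41, tn=9_951, fn=1)

for name in before:
    print(f"{name}: {before[name]:.4f} -> {after[name]:.4f}")
```

Precision is often the figure analysts feel most directly, since it reflects how many of the alerts they chase turn out to matter.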

The evolutionary challenge is compounded by the ongoing expansion of the enterprise. Migration to cloud workloads and remote/hybrid workforces have fueled this expansion, and malicious actors will continue to find new opportunities within these environments. Meanwhile, the most sophisticated adversaries will continue to find new ways of evading detection systems and blending in with normal traffic patterns.

Other challenges associated with implementing and maintaining anomaly-based detection systems include:

- Time and resource commitment. While security teams do not typically do the substantial work required to train algorithms, they often will need to commit time and data resources to tuning the model using feedback. The volume of data that must be collected and processed for the system to be effective may exceed an enterprise’s capacity, in terms of computing power, storage, and cost.

- Network performance. Some network monitoring tools introduce latency or reduce the efficiency or productivity of employees or processes. (Note: Corelight’s network detection and response sensors sit out of band and do not introduce latency).

How Corelight improves detection by leveraging multiple detection options

A threat detection and response system, particularly one that incorporates anomaly-based detection, will depend on the quality of network evidence. Going beyond indicator or signature matching and undertaking probing analysis depends on surfacing clues, in the form of network evidence, and using local context and threat frameworks. Without rich data, security teams must rely on published and enabled signatures to inform their defense, leaving them vulnerable to zero-day threats.

Corelight’s solution supplies rich network telemetry that can make investigations speedier and more accurate, while also helping security teams move beyond reactive postures and into a proactive threat hunting mode. By coupling Suricata signature-based alerts with behavioral and ML detections, and comprehensive network evidence from Zeek® logs and our Smart PCAP tool, the Corelight Open NDR Platform not only expedites threat response but also provides analysts with the means to refine threat hunting capacities over time.

Threat detection remains a mix of art, science, and tools that put machine learning at the service of human defenders. The key element is the quality of historical and real-time data. With Corelight, security teams can continue to access the data they generate even when they migrate to a new security platform.

Learn more about Corelight’s intrusion detection approach and open platform capabilities.

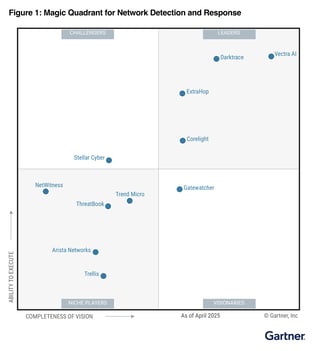

Corelight recognized as a Leader in the 2025 Gartner® Magic Quadrant™ for NDR

Recommended for you

Book a demo

We’re proud to protect some of the most sensitive, mission-critical enterprises and government agencies in the world. Learn how Corelight’s Open NDR Platform can help your organization tackle cybersecurity risk.