Investigating the effects of TLS 1.3 on Corelight logs, part 2

In part 1, I showed how Corelight would produce logs for a clear-text HTTP session. In part 2, I perform the same transaction using TLS 1.2.

This post is part of a multi-part series on encryption and network security monitoring. This post covers a brief history of encryption on the web and investigates the security analysis challenges that have developed as a result.

I’ve been hearing the message that encryption killed network security monitoring (NSM) since the late 2000s, and I wrote a few blog posts about NSM and encryption in 2008. I’ve learned to recognize that encryption is a potentially vast topic, but a person questioning the value of NSM versus “encryption” often has one major use case in mind: Hypertext Transfer Protocol (HTTP) within Transport Layer Security (TLS), or Hypertext Transfer Protocol Secure (HTTPS).

Those worrying about NSM vs encryption usually started their security career when websites mainly advertised their services over HTTP, without encryption. Gmail, for example, has always offered HTTPS, but only in 2008 did it give users the ability to redirect access to its HTTPS service if they initially tried the HTTP version. In 2010, Gmail enabled HTTPS access as the default.

Today, Google strives to encrypt all of its web properties, and the “HTTPS encryption on the web” section of Google’s Transparency Report makes for fascinating reading. Unfortunately, properly implementing HTTPS seems to be a challenge for most organizations, as shown by the prevalence of “mediocre” and outright “bad” ratings at the HTTPSWatch site. The 2017 paper “Measuring HTTPS Adoption on the Web” offers a global historical view that is also worth reading.

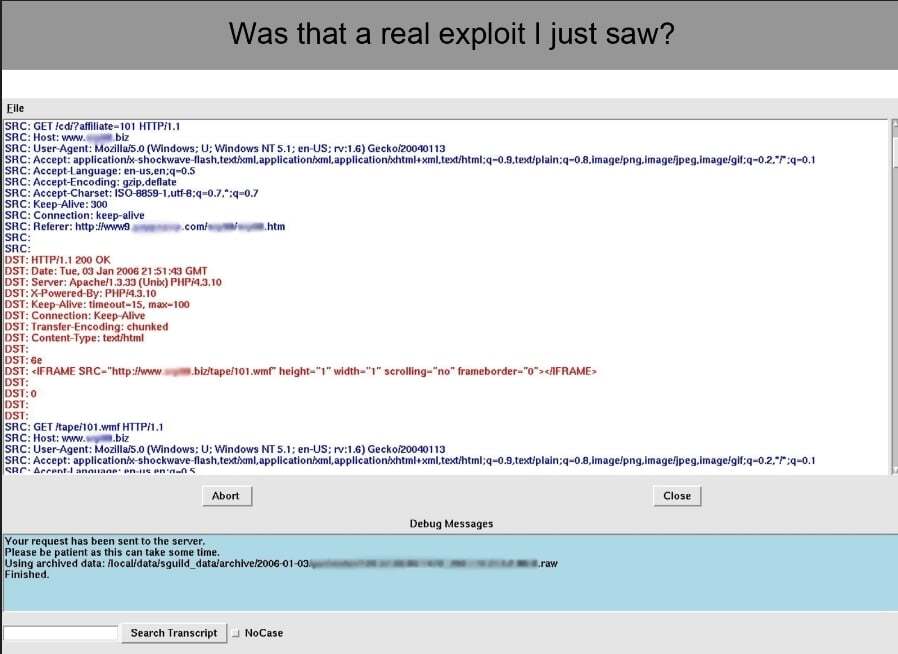

Prior to widespread adoption of HTTPS, security teams could directly inspect traffic to and from web servers. This is the critical concern of the “encryption killed NSM” argument. For example, consider this transcript of web traffic taken from a presentation David Bianco and I delivered to ShmooCon in 2006. (Incidentally, when we spoke at this conference, it was the first time we had ever met in public!) David investigated a suspected intrusion, and was able to systematically inspect transcripts of web traffic to confirm that a host had been attacked via malicious content but not compromised. (His original blog post is still online.)

Using the Zeek network security monitor (formerly “Bro”), we could have produced similar analysis using the conn.log, the http.log, and possibly the files.log.
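As a rough illustration of what working with those logs can look like, here is a minimal Python sketch that reads records in Zeek's default tab-separated layout, where a `#fields` header line names the columns. The sample http.log content, including every uid, address, and hostname, is invented for this example, and it shows only a small subset of the fields a real http.log carries.

```python
import io

# Hypothetical sample in Zeek's tab-separated log layout.
# All values are made up; a real http.log has many more fields.
SAMPLE_HTTP_LOG = "\n".join([
    "#separator \\x09",
    "#path\thttp",
    "#fields\tts\tuid\tid.orig_h\tid.resp_h\tmethod\thost\turi\tstatus_code",
    "#types\ttime\tstring\taddr\taddr\tstring\tstring\tstring\tcount",
    "1136239445.000000\tCexample1\t192.0.2.10\t198.51.100.20\tGET\twww.example.com\t/index.html\t200",
    "1136239446.000000\tCexample2\t192.0.2.10\t198.51.100.20\tGET\twww.example.com\t/missing\t404",
    "#close\t2006-01-02-00-00-00",
])


def read_zeek_tsv(stream):
    """Yield one dict per record, keyed by the names on the #fields line."""
    fields = []
    for line in stream:
        line = line.rstrip("\n")
        if line.startswith("#fields"):
            # Column names follow the "#fields" token, separated by tabs.
            fields = line.split("\t")[1:]
        elif line and not line.startswith("#"):
            # Any non-comment line is a data record.
            yield dict(zip(fields, line.split("\t")))


records = list(read_zeek_tsv(io.StringIO(SAMPLE_HTTP_LOG)))
for r in records:
    print(r["uid"], r["method"], r["host"] + r["uri"], r["status_code"])
```

The `uid` value is what ties an http.log record back to its connection in conn.log, so the same reader could be pointed at both files and the results matched on that field.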

Because David could see all of the activity affecting the victim system, and directly inspect and interpret that traffic, he could decide whether it was normal, suspicious, or malicious.

Encryption largely eliminates this specific method of investigation. When one cannot directly inspect and interpret the traffic, one is left with fewer options for validating the nature of the activity. Encryption, however, did not introduce this problem. One could argue that modern web technologies had already rendered many websites incomprehensible to the average security analyst.

Consider the “simple” Google home page. Viewed in a web browser, the page looks fairly simple.

Inspecting the source for the web page tells a different story: over 33 pages, or nearly 100,000 characters, of mostly JavaScript code.

How could any security analyst visually inspect and properly interpret the content of this page? I submit that the very nature of modern websites killed the security methodology that allowed an analyst to manually read web traffic and understand what it meant. Yes, tools have been introduced over the years to assist analysts, but the web content of 2018 is vastly different from that of 2006.

Even if modern websites were unencrypted, they are generally beyond the capability of the average security analyst to understand in a reliable and repeatable manner. This means that even without encryption, security teams would still need alternatives to direct inspection and interpretation to differentiate among normal, suspicious, and malicious activity involving web traffic. In the next article I will discuss some of those alternative models, placed within the context of HTTPS. I will likely expand beyond HTTPS in a third post. If you want to see me discuss other aspects of this problem as well, please let me know in the comments below or over on Twitter. You can find me at @taosecurity.

Richard Bejtlich - Principal Security Strategist, Corelight

