Executive summary:

- AI is changing how organizations think about every aspect of how they work, who they partner with, and how they must protect their most valuable assets.

- Cyber attackers are using AI to launch more sophisticated attacks. Defenders, in turn, must use AI-based tools across their security portfolio to help them proactively hunt for threats, analyze complex data sets, and accelerate and automate decisions.

- The rapid rate of change may add to misunderstanding and confusion around AI capabilities. It’s important to understand how AI tools are advancing cyber defense and response capabilities, to ensure organizations are getting the most value out of their vendor relationships and the tools they invest in.

Artificial intelligence (AI) is affecting every industry and enterprise in the near and long term. The cybersecurity industry has been an early adopter and testing ground for many AI use cases that help define the possibilities and challenges the technology delivers.

Because cyber criminals are leveraging many of the same tools, it is critical for organizations across the public and private sectors that cybersecurity professionals stay at the leading edge of AI’s development, whether they are building new tools and processes to detect intrusions, analyzing alerts, or educating other professionals about the enterprise’s changing threat landscape.

The utility of AI in cybersecurity has expanded due to several factors. The proliferation of devices, remote connections, cloud deployments, and complex supply chains has vastly increased the attack surface of most organizations.

The data generated by this expanded surface in turn leaves security operations centers (SOCs) struggling to monitor and prioritize a growing flood of alerts and feeds, compounding the risk of missed alerts and of attackers who persist undetected in key systems.

Heavy workloads and a significant talent gap leave cybersecurity teams in a double bind. Analysts can be tied down by repetitive tasks that do little to help them learn new skills or deepen their experience and value to their organizations. Meanwhile, budget cuts, hiring freezes, and too few developing professionals in educational pipelines have helped the talent gap persist, even as the overall size of the cybersecurity workforce expands. One study found a year-over-year increase of 8.7% in the number of cybersecurity professionals, yet the gap between worker demand and availability grew faster, at 12.6%.

Despite these challenges, it can be difficult for enterprises to choose which investments will best support their business objectives and SOC teams while also creating defenses that best mitigate their cyber risk and assist in compliance with evolving industry regulations and standards.

To make decisions about where and how to invest in AI-powered cybersecurity technology, it can be helpful to assess the current state of the technology and where it can provide security teams and the enterprise with a strong return on investment and improved cyber defense.

The core definitions of AI

Artificial intelligence is a generalized term for the field and tools that rely on machine-based intelligent systems. It has developed over decades, with significant recent advancements. It encompasses other terms that have come into common use over the past two decades, including machine learning (ML), deep learning (DL), large language models (LLMs), and neural networks.

In the cybersecurity field, AI can significantly enhance the capabilities and response times of highly skilled (and continuously learning) professionals. AI can be a critical extension of the SOC toolset.

As a continuum that is still in progress, the use of AI in cybersecurity can be broken out into categories that define its functionality. These categories are sometimes mapped to the NIST Cybersecurity Framework (Identify, Protect, Detect, Respond, Recover). Below, they are instead mapped to a typical defense lifecycle, which aligns more closely with a typical SOC’s needs and activities.

Threat detection and analysis. Using AI to spot malicious activity faster and more accurately than traditional detection engines (these can include signature-based, search, and other tools).

Threat hunting and investigation. Helping human SOC analysts proactively search for undetected threats and attacks.

Incident response and containment. Accelerating how quickly security teams can react to and remediate an attack.

Predictive and preventive security. Anticipating and preventing future attacks, including topics such as risk assessment and attack surface management.

Security operations optimization. Improving SOC efficiency and reducing analyst fatigue.

Four reasons why AI is necessary to cybersecurity

The talent shortage and overwork of SOC teams are ongoing, chronic problems that lead to inefficient use of resources, critical gaps in enterprise security, and analysts who lack the time and tools to hone their skills and develop new capabilities.

| Reasons for AI | Details |
|---|---|
| Keep the pace | Even in organizations that enjoy sufficient resources and cybersecurity talent, the volume and complexity of the datasets created by expanding networks, new endpoints, and increasingly complex supply chains and working environments have outstripped the analytic capabilities of humans. To keep pace with the developments in a digital marketplace, security teams and IT teams in general must harness the power of AI to confront this challenge. |
| Fight fire with fire | The rapidly developing capabilities of adversaries make the need for AI assistance even more urgent. Like a conventional military arms race, cyber attackers and defenders have the capability to make use of a neutral, extremely powerful technology. All that is certain is that the attackers, as they have since the beginning of the Internet, will make use of any tool that helps them achieve their objectives. Increasingly, those tools have incorporated the power of AI and machine learning. Defenders must anticipate this and fight fire with fire. |
| Executed with far less effort | The attackers leveraging AI benefit from a lower barrier to entry. Reconnaissance and intrusion techniques that required advanced skills can now be executed with far less effort. AI can assist in complex distributed denial of service (DDoS) attacks, brute forcing of credentials, accelerated data exfiltration, vulnerability detection, observation of network traffic, and the establishment of command and control (C2) channels, to name only a few methods. |
| Prevent model poisoning | Furthermore, attackers can focus on the AI tools defenders use, and potentially corrupt training data to skew outputs. Model poisoning has far-reaching implications for business at large; in the cybersecurity space, it could result in an attacker manipulating algorithms with the intention of making their activity appear normal or obscuring activity that uncorrupted models might detect. This reality underscores the need for SOCs to attain proficiency in monitoring the AI-powered tools they use. The tools themselves expand the enterprise’s attack surface. |
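The model poisoning risk described above can be illustrated with a deliberately simple sketch. It assumes a toy detector that learns an alert threshold (mean plus three standard deviations) from traffic samples labeled as normal; the sample values, the `learn_threshold` helper, and the threshold rule are all hypothetical and do not represent any particular product's model.

```python
from statistics import mean, stdev

def learn_threshold(samples, k=3.0):
    """Learn an alert threshold as mean + k standard deviations of 'normal' samples."""
    return mean(samples) + k * stdev(samples)

# Hypothetical outbound-traffic volumes (MB per hour) labeled as normal.
clean_training = [100, 110, 95, 105, 98, 102, 107, 99]

# Hypothetical samples an attacker slips into the training set, mislabeled as normal.
poisoned_samples = [400, 420, 450, 480, 500]

# Volume the attacker later generates during exfiltration.
attack_volume = 600

clean_threshold = learn_threshold(clean_training)
poisoned_threshold = learn_threshold(clean_training + poisoned_samples)

flagged_by_clean_model = attack_volume > clean_threshold
flagged_by_poisoned_model = attack_volume > poisoned_threshold

print(f"clean threshold:    {clean_threshold:.1f} MB, flagged: {flagged_by_clean_model}")
print(f"poisoned threshold: {poisoned_threshold:.1f} MB, flagged: {flagged_by_poisoned_model}")
```

In this toy setup the same attack volume exceeds the threshold learned from clean data but falls below the one learned from poisoned data, which is why monitoring the inputs to AI-powered tools matters as much as monitoring their outputs.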

AI-powered cybersecurity tools will continue to improve over time

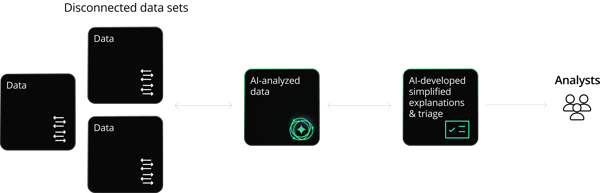

Artificial intelligence is a rapidly scaling, iterative process. It trains models and refines them over time by accessing multiple, complex datasets. Like most disciplines, cybersecurity is quickly adopting AI to streamline disparate and unconnected datasets. This allows for quick and easy-to-understand access to threat detections and their associated evidence, facilitating both real-time and forensic analysis.

AI cybersecurity tools are essential to connecting data repositories that can then be integrated and synthesized. For the SOC already using AI, this can lead to a more comprehensive understanding of an organization’s threat landscape, its normal traffic patterns, and adversarial behavior during or after a cyber event.

The development of AI cybersecurity tools is also a function of a larger ecosystem. Purveyors of network security, cloud security, attack frameworks, and other security functions can drive integrations and partnerships that provide analysts on the ground with better dashboards and event context, which can improve over time in a mutually reinforcing matrix.

AI use cases for network security

The impacts of AI on cybersecurity are too numerous and widespread to review in a single framework. Moreover, the technology is developing at such a rapid pace that some use cases that were not practical just a year ago are already in use today and likely to become increasingly relevant within the next few years. That said, use cases involving AI that are directly relevant to today’s SOC security challenges include:

AI-driven threat detection

AI/ML-based detections, as part of a multi-layered threat detection architecture, enable not only better and more accurate detection but also proactive threat detection in the SOC. AI-based technologies such as supervised machine learning detect similarities and patterns in indicators of compromise (IOCs), which lets them find evasive threats and threats missed by traditional detection methodologies. AI can detect both novel threats and threats that have been seen before.
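As a rough illustration of how supervised learning can pick up patterns in IOCs, the sketch below trains a nearest-centroid classifier on a handful of labeled domain names. Everything here is an assumption made for illustration: the three features (length, digit ratio, character entropy), the made-up "malicious" domains, and the choice of a nearest-centroid model, which stands in for the far richer models used in production detection.

```python
import math
from collections import Counter

def features(domain):
    """Turn a domain name into a small numeric feature vector."""
    counts = Counter(domain)
    n = len(domain)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    digit_ratio = sum(ch.isdigit() for ch in domain) / n
    return (float(n), digit_ratio, entropy)

def train(labeled_domains):
    """Compute one centroid (mean feature vector) per label."""
    grouped = {}
    for domain, label in labeled_domains:
        grouped.setdefault(label, []).append(features(domain))
    return {
        label: tuple(sum(col) / len(col) for col in zip(*vectors))
        for label, vectors in grouped.items()
    }

def classify(centroids, domain):
    """Assign the label whose centroid is nearest in Euclidean distance."""
    vector = features(domain)
    return min(centroids, key=lambda label: math.dist(vector, centroids[label]))

# Hypothetical labeled training data; the "malicious" names are invented
# random-looking strings, not real indicators.
training = [
    ("google.com", "benign"),
    ("github.com", "benign"),
    ("wikipedia.org", "benign"),
    ("example.org", "benign"),
    ("xj4k9q2zv8w1p7.biz", "malicious"),
    ("q8z7x6c5v4b3n2m1.info", "malicious"),
    ("r3t5y7u9i1o2p4.net", "malicious"),
]

centroids = train(training)
for candidate in ("python.org", "k7m2x9q4z1w8v5.com"):
    print(candidate, "->", classify(centroids, candidate))
```

The point of the sketch is the workflow rather than the model: labeled examples go in, a learned representation comes out, and previously unseen indicators are scored by their similarity to what the model has already seen.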

Anomaly detection is a popular AI-based technology that enables proactive threat hunting. Analysts can use unsupervised machine learning to analyze larger and more comprehensive data sets, gain a better understanding of baseline behavior in their networks and other environments, and identify anomalous behavior. With a richer understanding of the threat landscape, SOC analysts will be better prepared to anticipate or identify novel attack patterns.
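The baseline-then-deviation idea behind anomaly detection can be sketched in a few lines. This example assumes a simple per-host z-score over daily outbound volume as a stand-in for a real unsupervised model; the host addresses, the values, and the threshold of three standard deviations are hypothetical.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Learn a per-host baseline (mean, standard deviation) from unlabeled history."""
    return {host: (mean(values), stdev(values)) for host, values in history.items()}

def find_anomalies(baseline, current, threshold=3.0):
    """Return (host, z-score) pairs for hosts deviating from their own baseline."""
    anomalies = []
    for host, value in current.items():
        if host not in baseline:
            continue  # no history for this host yet
        mu, sigma = baseline[host]
        if sigma == 0:
            continue  # constant history; z-score is undefined
        z = (value - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((host, round(z, 1)))
    return anomalies

# Hypothetical outbound traffic per host (MB per day) over the past week.
history = {
    "10.0.0.5": [120, 130, 125, 118, 127, 122, 131],
    "10.0.0.9": [900, 950, 920, 880, 910, 940, 905],
}
# Hypothetical observations for today.
current = {"10.0.0.5": 124, "10.0.0.9": 4200}

baseline = build_baseline(history)
anomalies = find_anomalies(baseline, current)
print(anomalies)
```

No labels are needed here: each host is compared only against its own past behavior, which is what allows this style of detection to surface activity that no signature describes.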

AI-powered workflows

- Expert-authored workflows combining AI, LLM, and network context to deliver:

- AI Assistance with log summaries, response guidance, policy helpers, chat, NLQ (natural language queries), giving SOC analysts an opportunity to uplevel their skills. This is an important byproduct of streamlined workflows. Successful implementation of AI-powered tools will depend on analysts who have had the time to build skill sets that include management of those tools. AI’s capacity for synthesizing data and making complex material digestible can be an important element in the education and maturation of junior SOC analysts.

- AI Triage offering correlation, investigation, verdicts, and findings summaries. AI can streamline workflows and enable automation in the SOC, as well as provide improved mean times for detection and response to cyber threats. Diving into logs and triaging alerts can be an extremely time-consuming process, and also inefficient if a SOC analyst lacks experience that can help them prioritize and focus their efforts. A machine learning algorithm can provide relevant context or a summation of a dataset, and relieve SOC analysts of a great deal of repetitive remediation effort.

- AI Investigation enables powerful searches for IOCs, entities, third-party alerts, and A2A questions. AI-powered platforms offer valuable tools to provide context to alerts and suggest possible responses to analysts. Making use of machine learning tools like large language models (LLMs) can help power investigations, condense a complex process into a set of actionable next steps in clear language, automate alert scoring and prioritization, and improve mean time to detection (MTTD) and mean time to respond (MTTR).
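Because MTTD and MTTR are the yardsticks mentioned above, it can help to see how they are typically computed. The sketch below assumes one common convention: MTTD is the average time from when an incident began to when it was detected, and MTTR is the average time from detection to resolution. Definitions vary between organizations, and the field names and timestamps here are hypothetical.

```python
from datetime import datetime
from statistics import mean

def mean_minutes(incidents, start_key, end_key):
    """Average elapsed minutes between two timestamps across all incidents."""
    return mean(
        (incident[end_key] - incident[start_key]).total_seconds() / 60
        for incident in incidents
    )

# Hypothetical incident records.
incidents = [
    {
        "occurred": datetime(2024, 1, 1, 9, 0),
        "detected": datetime(2024, 1, 1, 9, 30),
        "resolved": datetime(2024, 1, 1, 11, 30),
    },
    {
        "occurred": datetime(2024, 1, 2, 14, 0),
        "detected": datetime(2024, 1, 2, 14, 10),
        "resolved": datetime(2024, 1, 2, 15, 10),
    },
]

mttd = mean_minutes(incidents, "occurred", "detected")
mttr = mean_minutes(incidents, "detected", "resolved")
print(f"MTTD: {mttd:.0f} minutes, MTTR: {mttr:.0f} minutes")
```

Tracking both numbers before and after introducing an AI-assisted workflow gives a SOC a concrete way to check whether triage and investigation are actually getting faster.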

AI-enabled ecosystem

- An AI-enabled ecosystem helps to identify and mitigate risks faster across the entire security ecosystem. SOCs should make sure their solution provides structured, context-rich network data in open-source standards already understood by LLMs, designed to feed seamlessly into SIEMs and AI/ML pipelines out of the box. Newer ecosystem technologies often provide this integration through MCP server capabilities and LLM promptbooks.

Considerations for AI and cybersecurity

AI’s power and rapid development may cause some concern for organizations. While AI-based tools are quickly becoming a necessity in the SOC, these tools can elevate organizational risk related to misuse (by malicious actors or employees) and unrealistic expectations. It is important to balance the need for AI assistance in cybersecurity against the negative implications AI may have for the organization.

Key security considerations for AI adoption in the SOC include:

- How the models use data. There has been extensive discussion about the origins of data used for the training and tuning of AI models. Organizations will need to make careful decisions about what data they make available and whether they can remain compliant with industry standards and business priorities. When deploying AI-powered security solutions, the organization should evaluate a vendor’s approach to transparency regarding the construction of its models and their inputs, as well as the vendor’s risk management framework.

- Oversight of outputs. AI hallucinations, data poisoning, and data modification can be serious concerns for any AI use case. The value of employees with skills for evaluating AI outputs, along with the explainability of AI-based detections, will continue to rise.

- Plugging into a virtuous feedback loop. Customer experience with AI models has the potential to greatly improve the performance of AI-powered tools. Platforms that include safeguards can help create a system for ongoing model tuning and refinement without unnecessary exposure of proprietary customer data. Additionally, tool and platform vendors connected to a wide ecosystem of partners and evidence sources can deliver force multipliers to detection and response capacities.

- Level setting of expectations. AI already warrants a “game changer” description, but it is important to remember that AI still has limitations. It is an additional tool in the arsenal, not a replacement for the existing tools used by the SOC.

How Corelight leverages AI in its NDR Platform

Corelight has been a leader in the development and implementation of AI-powered platforms that give defenders the tools for defense-in-depth without compromising company data. Our Open NDR Platform leverages powerful machine learning and open source technologies, including LLMs such as ChatGPT, that can detect a wide range of sophisticated attacks and provide analysts with context to interpret security alerts. Our approach delivers significant contextual insights while maintaining customer privacy: No proprietary data is sent to LLMs without the customer’s knowledge and authorization.

Corelight believes that AI toolsets will deliver pre-emptive detection, investigation, and remediation, enabling SOCs to eliminate T1 workflows and up-level existing analysts to T2/T3, where human expertise and judgment are best applied.

Corelight’s AI platform encompasses the three primary use cases for AI in cybersecurity: AI-driven threat detection, AI-powered workflows, and the AI-enabled ecosystem. These use cases are all backed by forensic-grade network evidence and context, gathered in real time across on-premises, hybrid, and multi-cloud environments.

Our use of Zeek® and Suricata, as well as partnerships with CrowdStrike, Microsoft Security, and other security consortia, delivers the double benefit of maximized visibility and high-quality contextual evidence that has helped us expand our offerings of supervised, unsupervised, and deep learning models for threat detection.

Corelight offers the GenAI Accelerator Pack to seamlessly integrate with the organization’s ecosystem. The pack includes a Model Context Protocol (MCP) Server, Analyst Assistant Promptbooks, and Investigation Promptbooks, combining industry-standard network evidence with the power of large language models (LLMs) to accelerate and enhance security operations center (SOC) workflows.

At Corelight, we’re committed to transparency and responsible stewardship of data, privacy, and AI model development. We help analysts automate workflows, improve detections, and expand investigations via new, powerful context and insights. We encourage you to keep current with how our solutions are optimizing SOC efficiency, accelerating response, upleveling analysts, and helping to mitigate staffing shortages and skill gaps.

Keep current with Corelight’s advances through our blog and resource center.


We’re proud to protect some of the most sensitive, mission-critical enterprises and government agencies in the world. Learn how Corelight’s Open NDR Platform can help your organization mitigate cybersecurity risk.